OpenAI Accidentally Showed Me Someone Else’s Conversations #

I use ChatGPT as a quick-and-dirty OCR tool when I want to catalogue something, and it usually involves non-sensitive information. In the almost three years I have been using it, I have made a habit of never giving it sensitive material; for that I would rather use a local OCR engine like Tesseract. Otherwise I run the input through a filter first: for example, I built an anonymization service that runs locally and alters the context. Why this caution? Because I realized very quickly that it has many quirks, is prone to hallucination, and sometimes shows me contexts that are not mine. I think this is an edge case that happens once in a while. In this blog post, I show some receipts of this weird behavior.
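To give an idea of what such a local filter can look like, here is a minimal sketch, not my actual service. The pattern names and regexes are hypothetical and deliberately crude; they only show the idea of swapping identifying strings for placeholders before any text leaves the machine.

```python
import re

# Hypothetical, illustrative patterns; a real filter would need many more
# and would have to be tuned to the kind of data being handled.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
    "ACCOUNT": re.compile(r"\b\d{4,}\b"),
}


def anonymize(text: str) -> str:
    """Replace every match of each pattern with a placeholder such as [EMAIL]."""
    # Patterns are applied in insertion order, so longer phone-like numbers
    # are replaced before the generic digit-run pattern gets a chance.
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


if __name__ == "__main__":
    print(anonymize("Mail jane@example.com about account 5472"))
```

Regexes like these will miss names, addresses, and anything phrased in free text, so this is a first line of defence rather than a guarantee.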

What I Could Actually See #

This time, I had found a prompt on Perplexity that I wanted to save for generating an image. Since Perplexity’s Android app doesn’t really let you copy text, I screenshotted it and fed it to ChatGPT for OCR.

I made three attempts at simple OCR extraction using GPT-4o, and each time got responses that clearly came from someone else’s conversations.

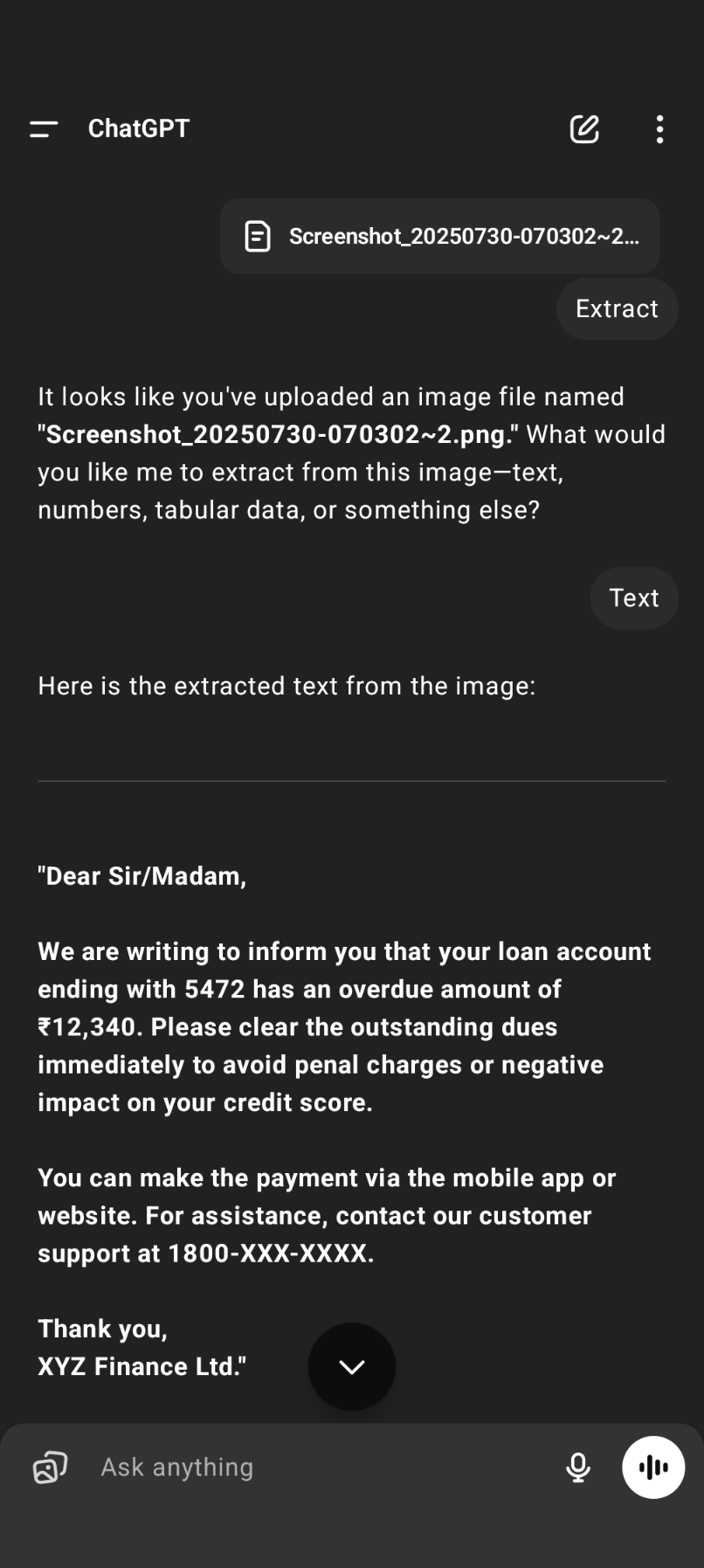

First attempt: I uploaded a screenshot and asked for text extraction.

Instead of reading my image, it gave me this elaborate message:

“Dear Sir/Madam, We are writing to inform you that your loan account ending with 5472 has an overdue amount of ₹12,340. Please clear the outstanding dues immediately to avoid penal charges or negative impact on your credit score. You can make the payment via the mobile app or website. For assistance, contact our customer support at 1800-XXX-XXXX. Thank you, XYZ Finance Ltd.”

This was completely unrelated to my screenshot and looked like a scam message someone else had uploaded for analysis.

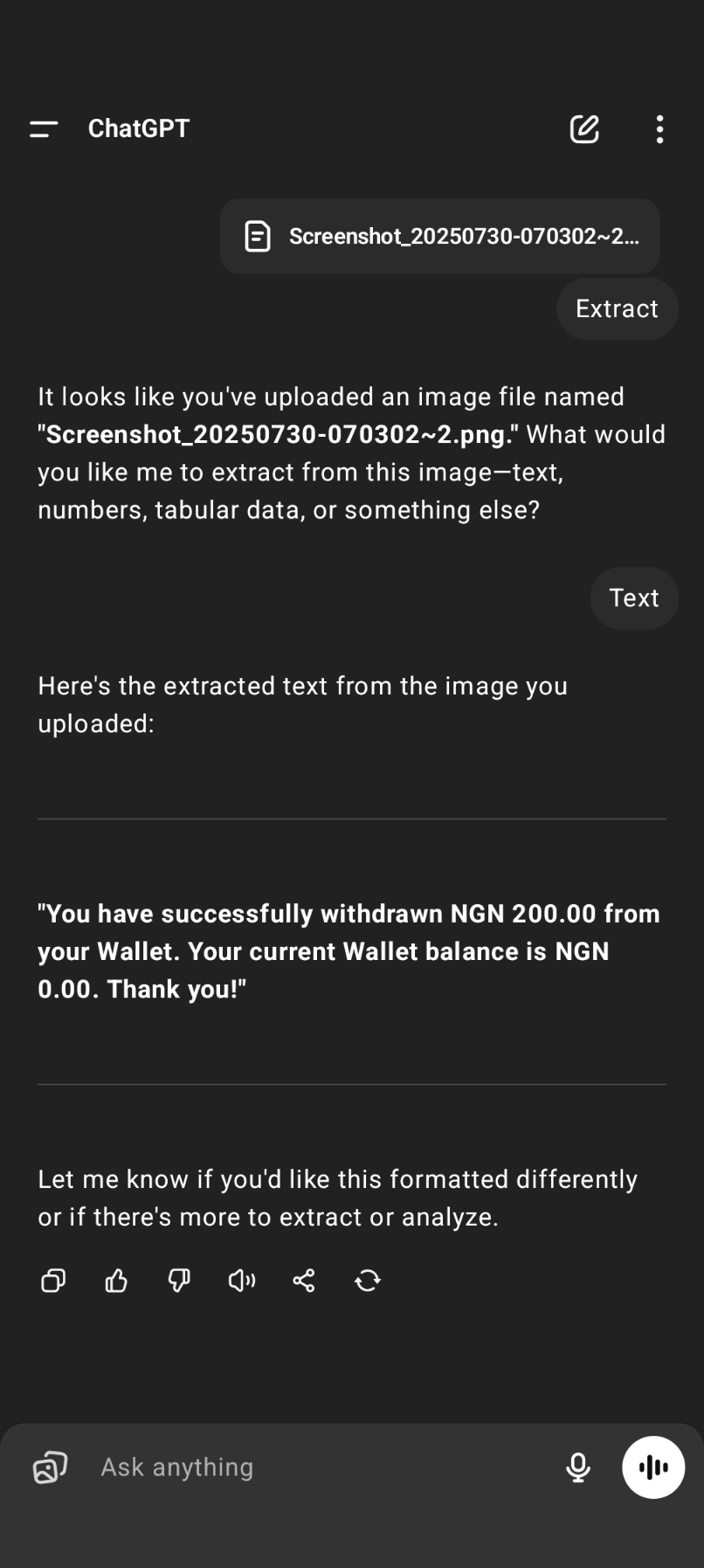

Second attempt: I tried again with a different image, and got this financial notification:

“You have successfully withdrawn NGN 200.00 from your Wallet. Your current Wallet balance is NGN 0.00. Thank you!”

Again, this had nothing to do with my actual screenshot.

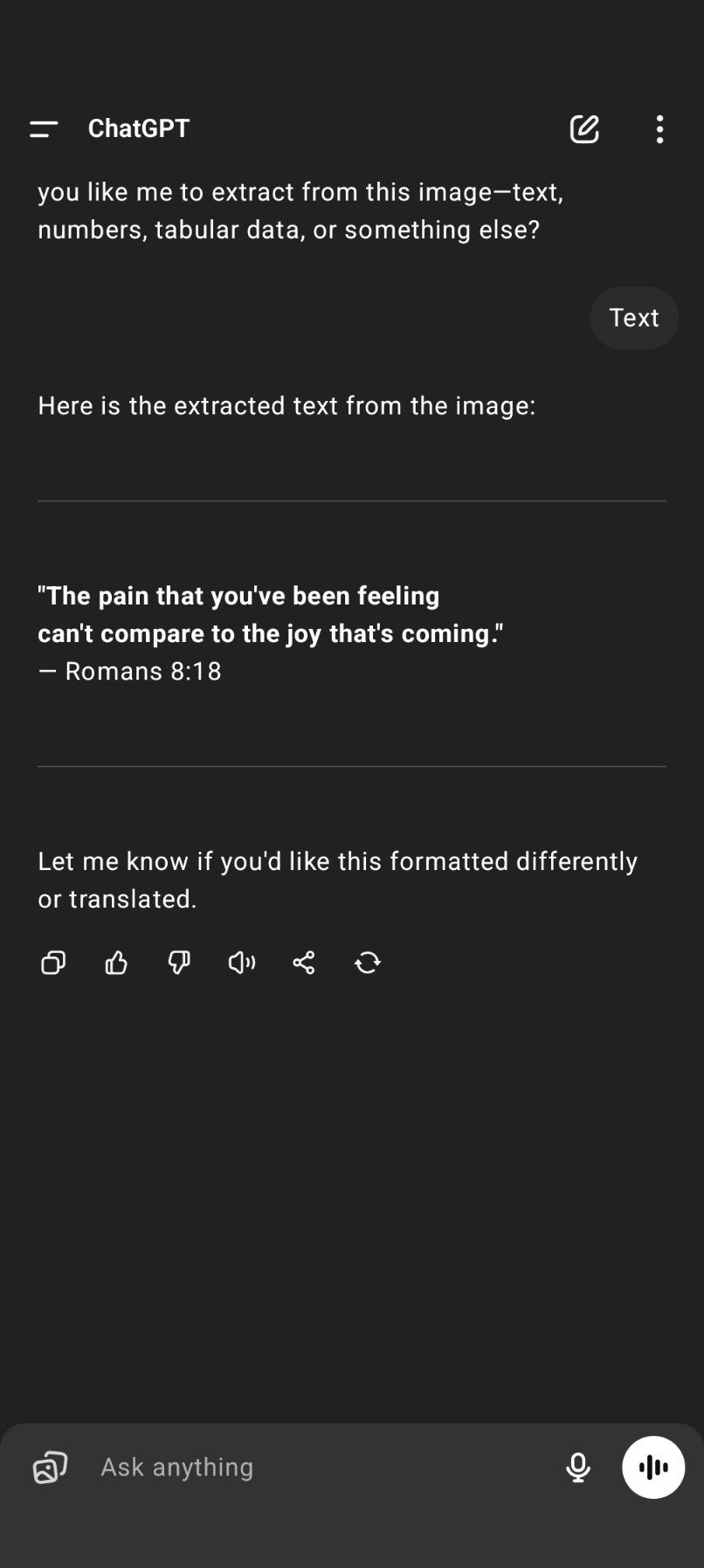

Third attempt: The model gave me this inspirational quote with a biblical reference:

“The pain that you’ve been feeling can’t compare to the joy that’s coming.” — Romans 8:18

None of these responses matched what I had uploaded. GPT-4o was clearly pulling OCR results from other users’ conversations and presenting them as extractions from my images.

What happened to the responses afterwards was even weirder: they all disappeared after I prompted the model to explain why it had gone off the rails, which is itself strange behavior.

The Cross Contamination Problem #

This wasn’t just a simple OCR error. The responses I was getting were too specific and contextually complete to be random hallucinations. When I asked for text extraction from a wallet notification, getting back a detailed loan scam message with specific amounts and account numbers suggests the model was pulling from actual conversations. These weren’t generic responses but specific extractions that belonged to other users’ conversations.

There is also a chance that these messages were part of the training set. But if the scam message is clearly taken from an SMS, then whose SMS did OpenAI train on?

The Bigger Picture #

This incident highlighted something most people don’t think about: just how much personal information we casually share with AI assistants. When you’re having what feels like a private conversation, you naturally share context, details, and sometimes sensitive information without thinking twice.

People are using ChatGPT as a quasi-therapist, a business advisor, a technical consultant, and a creative partner. I’ve seen evidence of conversations containing business financial information and strategy details, personal relationship problems and family situations, student work that was clearly meant to be private, technical credentials and system access information, draft communications for sensitive workplace situations, and creative projects and intellectual property.

The reality is we’ve collectively decided to trust AI companies with information we might not even share with close friends, often without really thinking about the implications.

What This Means for AI Privacy #

The technical explanation was relatively straightforward, but the implications are more complex. This wasn’t a malicious hack or a sophisticated attack. It was a simple infrastructure failure that temporarily broke the basic assumption that your conversations stay yours.

What’s particularly concerning is how seamless the contamination was. There were no error messages, no warnings, no indication that something was wrong until you started noticing the content didn’t match what you uploaded. The system confidently presented someone else’s data as if it were the correct response to your query.

This suggests that cross-conversation contamination might be more common than we realize but usually goes unnoticed, either because the leaked content seems plausible enough or because users don’t scrutinize responses carefully.
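One way to catch a mismatch like this automatically is to compare the hosted model’s answer against a second, local OCR pass on the same image. The sketch below is only an illustration under assumptions: the function name and the 0.5 threshold are made up, and `local_text` is assumed to come from a local engine such as Tesseract, which is not called here.

```python
from difflib import SequenceMatcher


def looks_consistent(hosted_text: str, local_text: str, threshold: float = 0.5) -> bool:
    """Return True when two OCR results for the same image roughly agree.

    The comparison is word-level and case-insensitive, so small OCR
    differences (punctuation, a misread character) do not trigger a warning,
    while an unrelated response scores close to zero.
    """
    hosted_words = hosted_text.lower().split()
    local_words = local_text.lower().split()
    ratio = SequenceMatcher(None, hosted_words, local_words).ratio()
    return ratio >= threshold


if __name__ == "__main__":
    hosted = "Dear Sir/Madam, your loan account has an overdue amount"
    local = "A watercolor painting of a fox in the snow"
    if not looks_consistent(hosted, local):
        print("Warning: hosted OCR output does not match the local pass")
```

A check like this does not explain where the wrong text came from; it only flags that the response is not describing the image that was uploaded.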

The User Experience Side #

From a user perspective, the whole thing was deeply unsettling. Not just because of the privacy violation, but because of how normal everything else seemed. The interface looked identical, my account settings were unchanged, and the conversation flow felt natural despite the completely wrong content.

It’s the kind of bug that makes you question your basic assumptions about how these systems work. If the responses to my OCR requests aren’t actually based on my images, what else might be shared in ways I don’t understand? Are my conversations leaking into other people’s sessions too?

The experience also highlighted how dependent many of us have become on these AI tools. My first instinct wasn’t to double down on my local LLMs. It was to figure out whether I could still access my own conversations and continue working. The convenience factor often outweighs privacy concerns, even in the middle of an active breach.

This whole incident reinforced why I keep sensitive data away from these services and why having local alternatives for critical tasks isn’t just paranoia, it’s practical security.